bagging machine learning ensemble

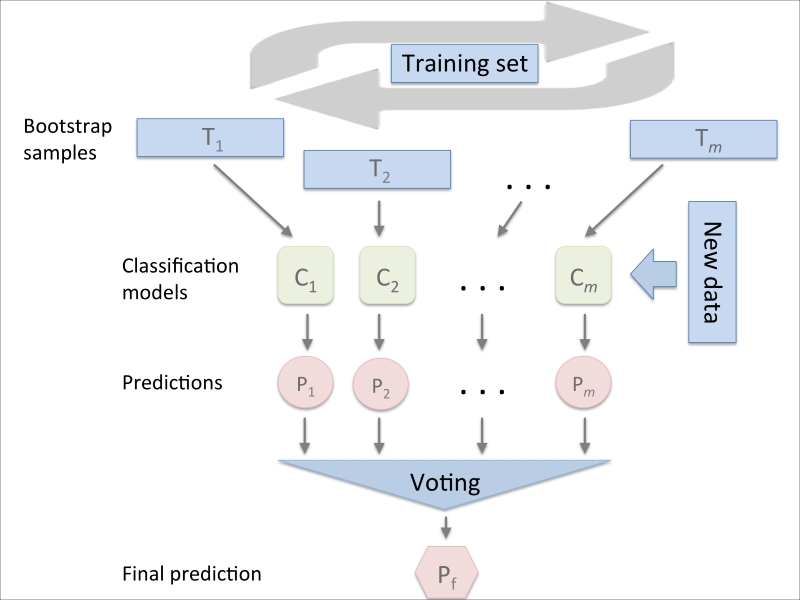

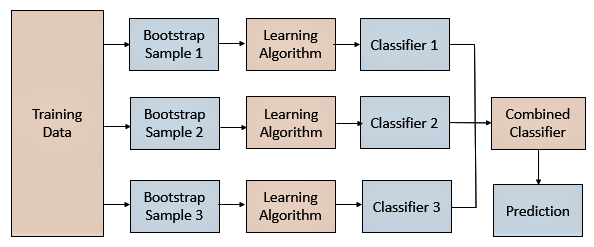

Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms. Machine learning is a subfield of artificial intelligence in which models learn from data on their own, without being explicitly programmed.

A Practical Tutorial On Bagging And Boosting Based Ensembles For Machine Learning Algorithms Software Tools Performance Study Practical Perspectives And Opportunities Sciencedirect

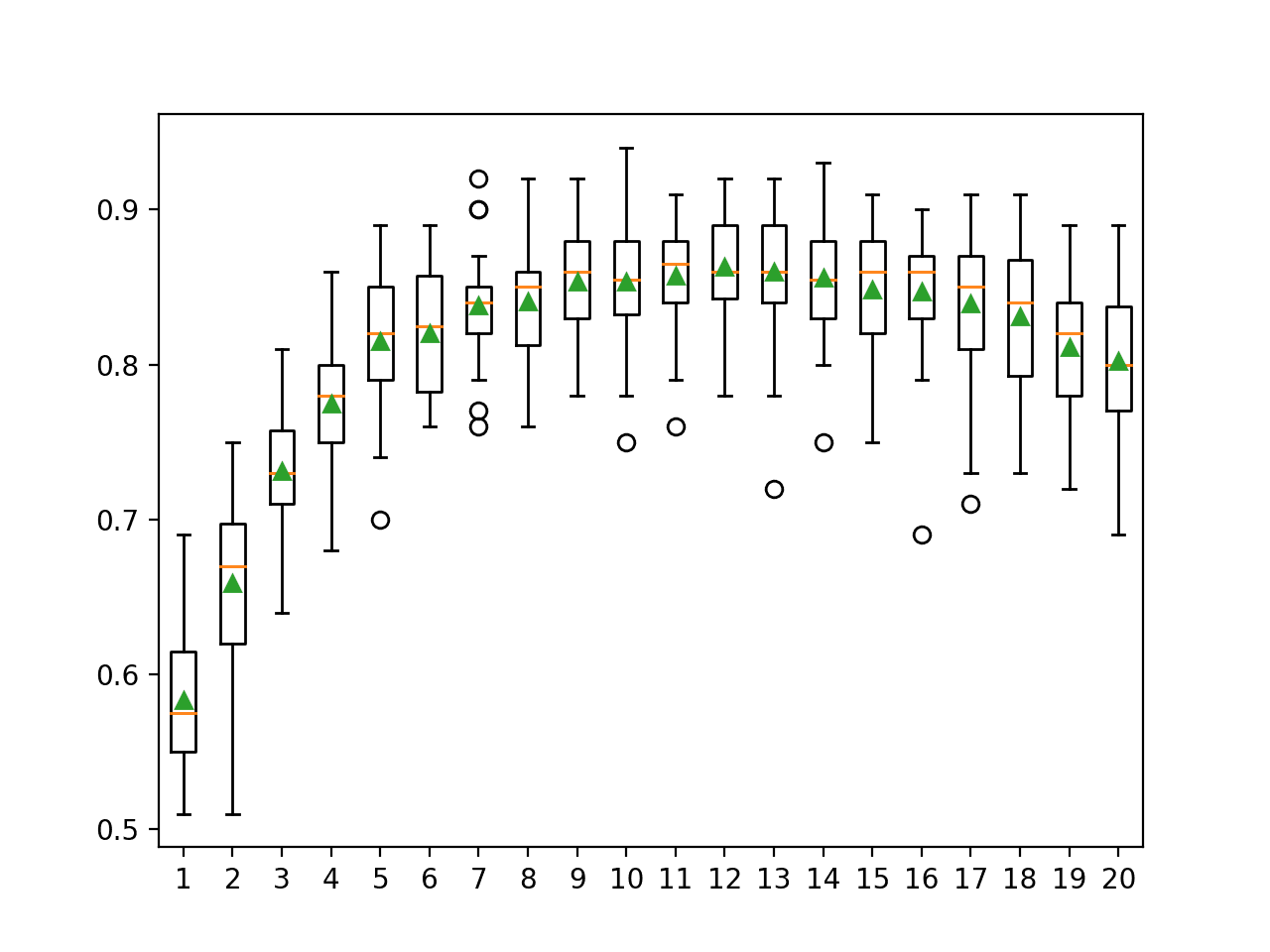

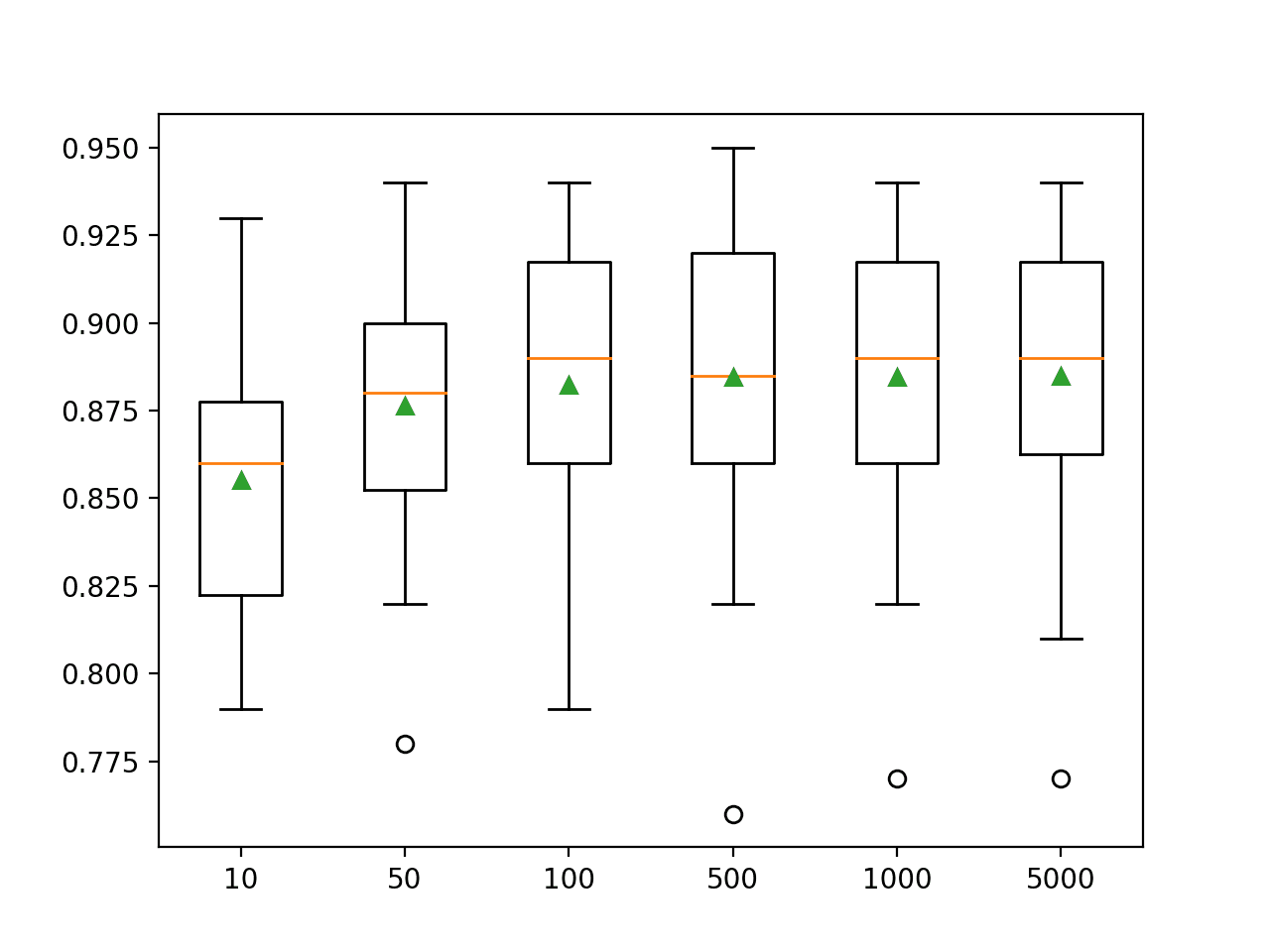

The bias-variance trade-off is a challenge we all face while training machine learning algorithms.

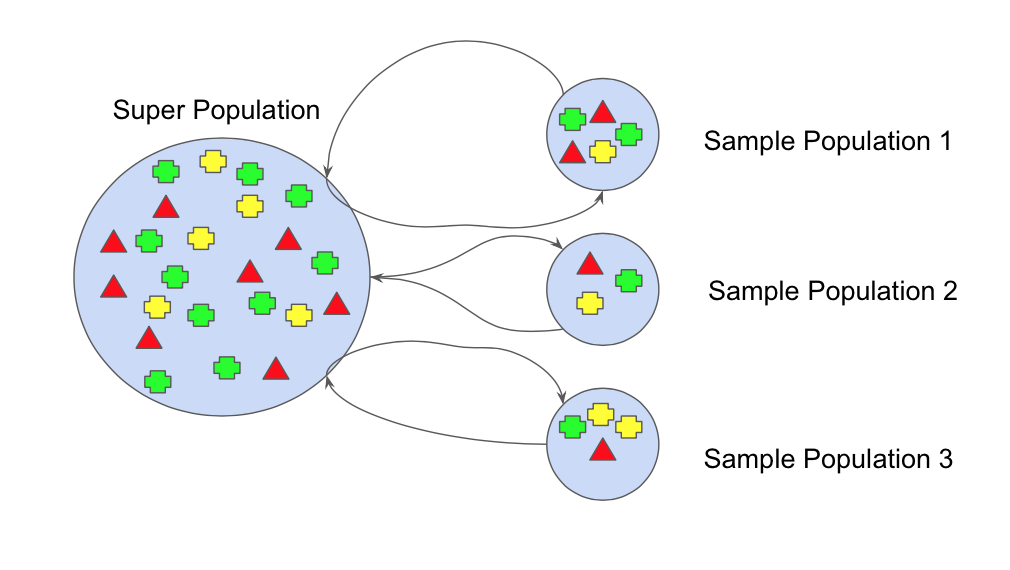

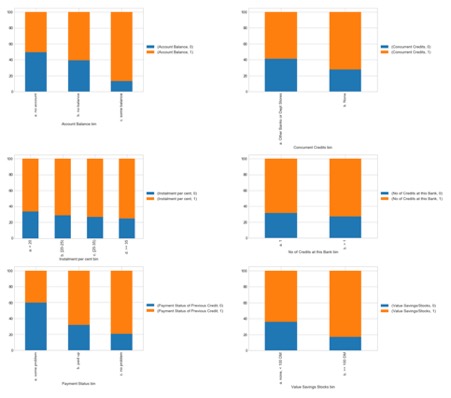

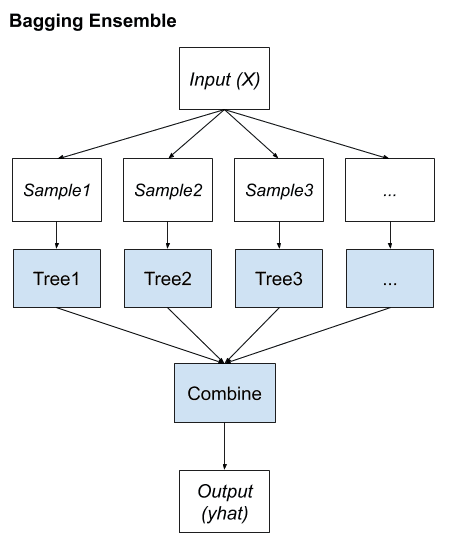

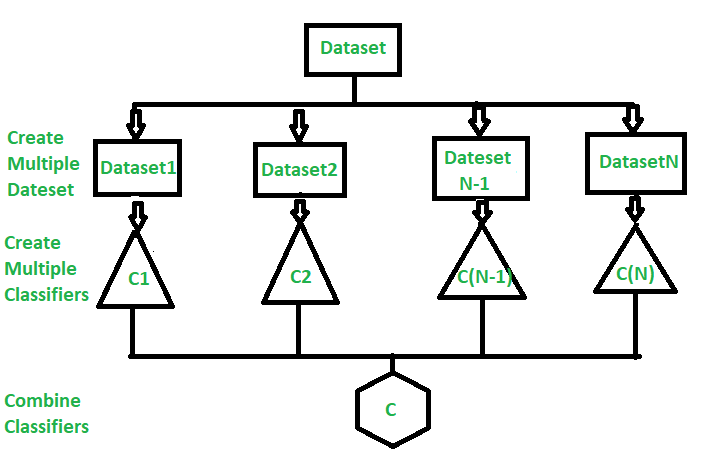

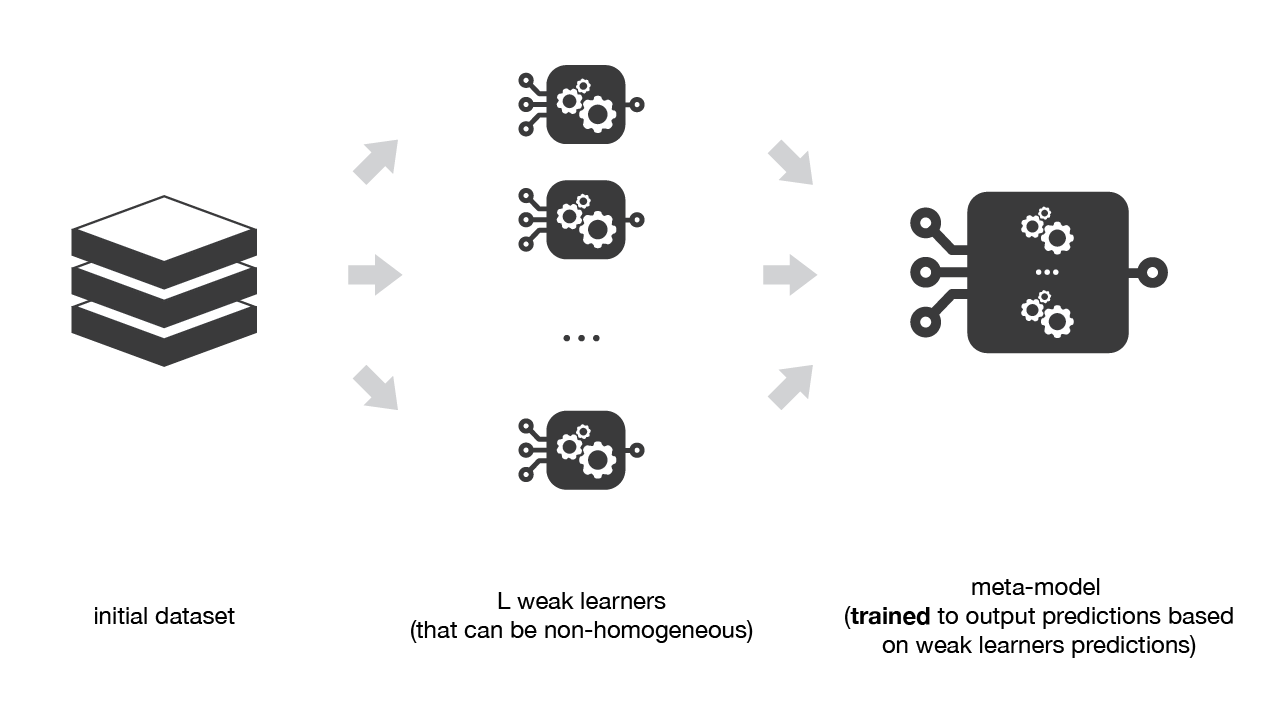

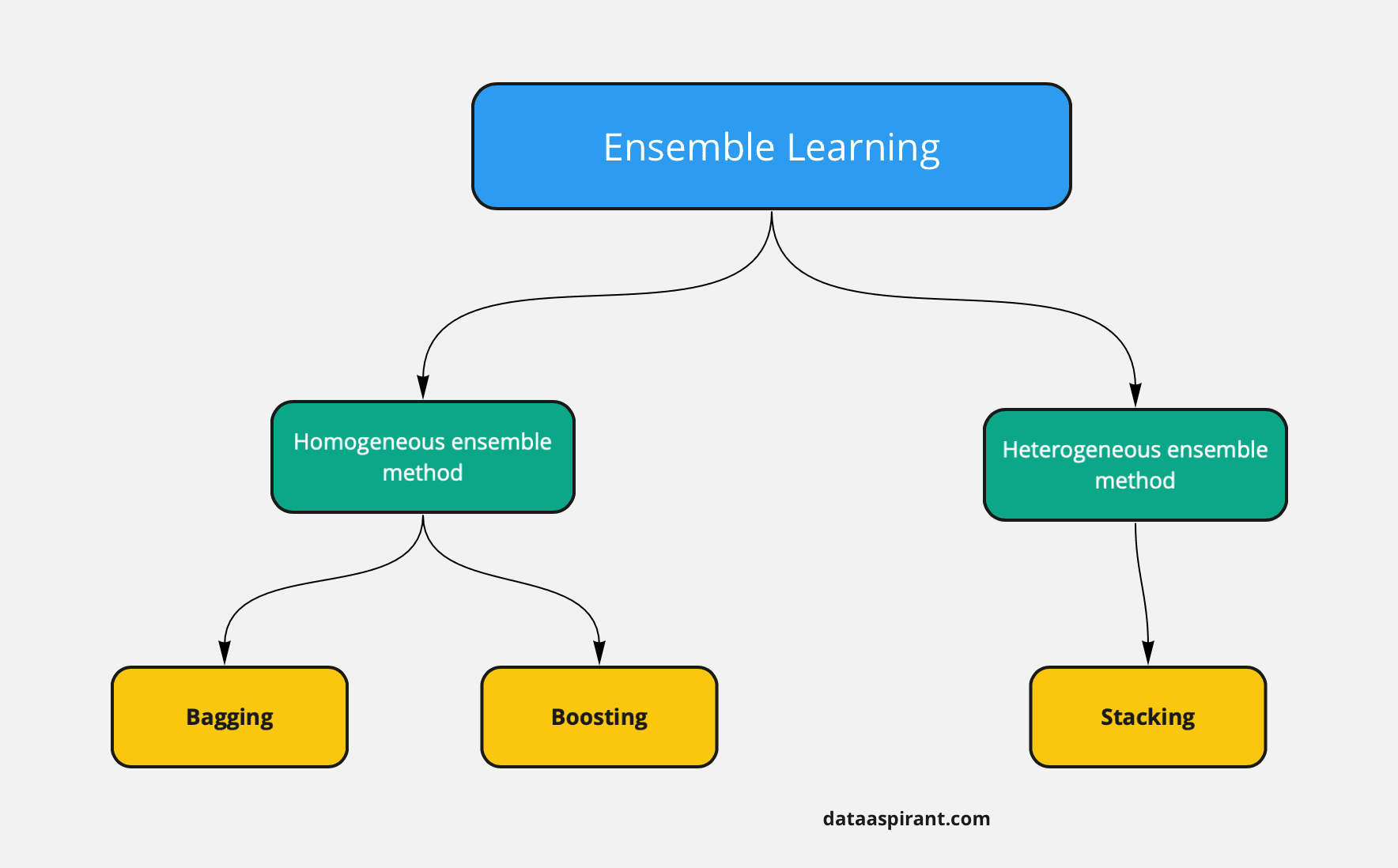

Ensemble learning is all about combining the predictive power of multiple models to get better predictions with lower variance. Ensemble learning in ML aggregates the results of different ML classifiers, aiming for better performance in accuracy and attack classification.
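To see why combining models can lower variance, consider an idealized case. This is an assumption made for illustration, not something stated above: $n$ models whose predictions $\hat f_i(x)$ all have variance $\sigma^2$ and pairwise correlation $\rho$. The variance of their average is then

$$
\operatorname{Var}\left(\frac{1}{n}\sum_{i=1}^{n}\hat f_i(x)\right)
= \frac{1}{n^2}\left(n\sigma^2 + n(n-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{n}\sigma^2 .
$$

With uncorrelated models ($\rho = 0$) the variance shrinks as $\sigma^2/n$. With perfectly correlated models ($\rho = 1$) averaging gives no reduction at all, which is why ensembles benefit from models that differ from one another.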

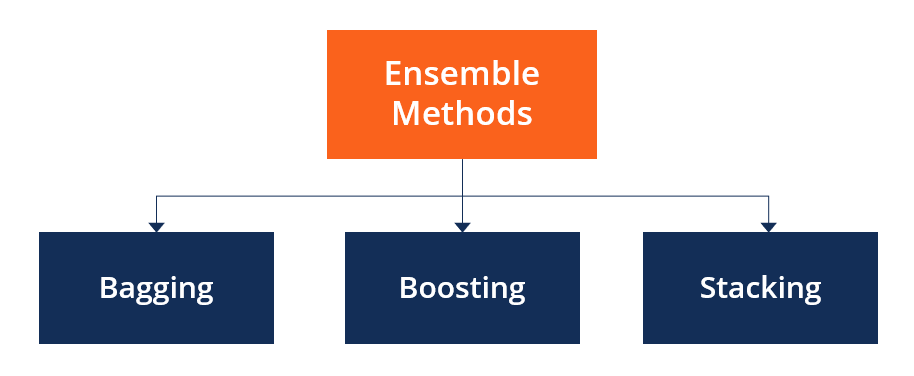

The original ensemble method is Bayesian averaging, but more recent algorithms include error-correcting output coding, bagging, and boosting. The general idea is that a team of models is able to increase the…

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, either by voting or by averaging, to form a final prediction. The base classifiers are all built with a given learning algorithm.
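A minimal sketch of such a bagging classifier, assuming scikit-learn is installed. The synthetic dataset, the decision tree base classifier, and the parameter values (25 estimators, 80% subsets) are illustrative choices, not taken from the text above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# The base classifier is passed positionally because its keyword name
# differs between scikit-learn releases.
bagging = BaggingClassifier(
    DecisionTreeClassifier(),
    n_estimators=25,   # number of base classifiers to fit
    max_samples=0.8,   # each one sees a random 80% subset
    bootstrap=True,    # subsets are drawn with replacement
    random_state=0,
)
bagging.fit(X_train, y_train)

# score() aggregates the base classifiers' predictions and reports accuracy.
accuracy = bagging.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```

After fitting, the individual base classifiers are available in `bagging.estimators_`, which can be handy for inspecting how much they disagree.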

Bagging and boosting are ensemble methods focused on getting N learners from a single learner. Bagging, the short form of bootstrap aggregating, is mainly applied in classification and regression. Roughly, ensemble learning methods often top the rankings of many machine learning competitions, including Kaggle's competitions.

Bagging is a powerful ensemble method that helps to reduce variance. In machine learning classification problems, the simplest example of an ensemble is a majority committee.

Bagging and boosting are two main types of ensemble methods. Both use random sampling to generate several training sets.
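The random sampling step in bagging is bootstrap sampling: each training set is drawn from the original data with replacement. A minimal sketch using only the standard library; the helper name `bootstrap_samples` and the toy data are hypothetical.

```python
import random


def bootstrap_samples(data, n_samples, seed=0):
    """Draw n_samples bootstrap samples, each the same size as data."""
    rng = random.Random(seed)
    # random.choices samples with replacement.
    return [rng.choices(data, k=len(data)) for _ in range(n_samples)]


data = list(range(10))
samples = bootstrap_samples(data, n_samples=3)
for i, sample in enumerate(samples):
    # Because of replacement, some values repeat and others are left out.
    print(i, sorted(sample), "unique values:", len(set(sample)))
```

Each sample would then be used to train one model of the ensemble, so the models see slightly different views of the same data.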

Ensemble models are a particular kind of machine learning model that mixes several models together. The general principle of an ensemble method in machine learning is to combine the predictions of several models. The mode here is the value that occurs most often among the predictions.
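Combining classifier predictions by taking their mode is what a majority committee does. A small sketch, where the function name `majority_vote` and the example labels are hypothetical:

```python
from collections import Counter


def majority_vote(predictions):
    """Return the most common label among the predictions (the mode)."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical outputs of five classifiers for a single input.
votes = ["spam", "ham", "spam", "spam", "ham"]
print(majority_vote(votes))  # spam
```

On a tie, `most_common` returns the tied label that was seen first, so an odd number of voters is the usual way to avoid ties in binary problems.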


How To Develop A Bagging Ensemble With Python

Ensemble Learning Bagging And Boosting By Jinde Shubham Becoming Human Artificial Intelligence Magazine

Ensemble Methods Overview Categories Main Types

A Primer To Ensemble Learning Bagging And Boosting

Bagging Building An Ensemble Of Classifiers From Bootstrap Samples Python Machine Learning Book

Ensemble Machine Learning Github Topics Github

Pdf Comparison Of Bagging And Boosting Ensemble Machine Learning Methods For Automated Emg Signal Classification Semantic Scholar

A Gentle Introduction To Ensemble Learning Algorithms

What Is The Difference Between Bagging And Boosting Methods In Ensemble Learning Youtube

Ensemble Learning Bagging Boosting By Fernando Lopez Towards Data Science

Ensemble Classifier Data Mining Geeksforgeeks

Ensemble Stacking For Machine Learning And Deep Learning

Bagging Bootstrap Aggregation Overview How It Works Advantages

Ensemble Methods Bagging Boosting And Stacking By Joseph Rocca Towards Data Science

Ensemble Methods Bagging Vs Boosting Difference

Bagging Vs Boosting In Machine Learning Geeksforgeeks

Learn Ensemble Learning Algorithms Machine Learning Jc Chouinard